Chapter 25: Jobs

Index

- Introduction

- What is a job?

- Background jobs and the “&” character

- Variable “$!”

- Job management commands

- Summary

- References

Introduction

In Bash, jobs represent the commands or processes that you execute from the shell. These jobs can run either in the foreground, where they take over your terminal until completion, or in the background, where they continue to run while freeing your terminal for other tasks. The ability to manage jobs is a powerful feature of Bash that enhances multitasking and productivity on the command line.

Jobs are particularly useful when you need to run long processes without tying up your terminal. For example, downloading large files, compiling code, or running simulations can be sent to the background, allowing you to continue working without interruption. Bash provides a set of intuitive commands and features to control, monitor, and manage these jobs effectively.

Key to understanding jobs in Bash is learning how to start processes in the background, bring them back to the foreground, stop or resume them, and track their progress. Using operators like “&” to start background processes and commands like “jobs”, “fg”, “bg”, and “kill”, you can interact seamlessly with active processes.

Jobs are more than just convenience—they’re a way to boost efficiency and maximize the potential of your command-line environment. Whether you’re running multiple scripts, monitoring system tasks, or simply trying to multitask, mastering jobs in Bash will give you greater control and flexibility over your workflows.

What is a job?

Each time you execute a command in a Bash script or terminal, it is treated as a job. Think of a “job” as a more abstract concept than an individual process—it represents a unit of work that the shell manages.

As explored in the chapter on I/O Redirections, when you run a command like this:

command1 | command2 | command3

Bash creates three separate processes—one for each command. These processes are linked together, with the standard output of one feeding into the standard input of the next. Despite involving multiple processes, this entire chain is treated as a single job by Bash. This abstraction allows the shell to manage the collective work as a unified task, streamlining control and interaction.
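The idea that a whole pipeline counts as one job can be checked from a script. The following is a minimal sketch: the three “sleep” commands are placeholders chosen only for illustration, and the variable names are hypothetical.

```shell
#!/usr/bin/env bash
# A pipeline of three processes started as a single background job.
# The "sleep" commands are stand-ins for real work.
sleep 1 | sleep 1 | sleep 1 &

# "jobs -p" prints one PID per job, so the number of entries
# is the number of jobs in the table.
job_pids=( $(jobs -p) )
job_count=${#job_pids[@]}
echo "Processes in the pipeline: 3, jobs in the table: ${job_count}"

wait   # let the pipeline finish before the script exits
```

Although three processes are running, the job table holds a single entry for them.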

Background jobs and the “&” character

By default, jobs and commands run to completion, blocking the shell that invoked them. For instance, consider the following script.

#!/usr/bin/env bash

# Script: jobs-0001.sh

while true; do

  echo "Message"

  sleep 3

done

This script contains an endless loop that prints the message “Message” to the terminal (standard output) every three seconds. Upon executing this script, you will observe the following output.

$ ./jobs-0001.sh

Message

Message

Message

^C

In this scenario, the terminal remains blocked, as the script continuously runs without terminating. To stop its execution, you would need to interrupt it manually using Ctrl+C (shown as “^C” in the terminal).

However, this behavior can be altered by appending the special character “&” at the end of the command, allowing the script to run in the background and freeing up the terminal for other tasks.

#!/usr/bin/env bash

# Script: jobs-0002.sh

while true; do

  echo "Message"

  sleep 3

done &

#    ^ the ampersand sends the loop to the background

Now, when you execute the “jobs-0002.sh” script, you will get the following in your terminal window.

$ ./jobs-0002.sh

Message

$ Message

Message

Message

...

If you carefully examine the output, you’ll notice two significant changes. First, the terminal is no longer blocked, meaning you can freely use it for other commands. Second, the script continues to print messages to the screen, which can quickly become disruptive and inconvenient as you try to work in the terminal.

I recommend redirecting the standard output of the “while” loop to a file to avoid cluttering the terminal. You can achieve this by applying the redirection techniques we explored in the “While Loop Redirection” section of the “I/O Redirections” chapter. Here’s how it looks.

#!/usr/bin/env bash

# Script: jobs-0003.sh

while true; do

  echo "Message"

  sleep 3

done >/dev/null &

#    ^^^^^^^^^^ ^ redirect the output, then run in the background

Once you execute the “jobs-0003.sh” script your terminal will look like this.

$ ./jobs-0003.sh

You will see that there is no output in your terminal window, but the loop is still running in the background. You can check this by running the command “ps aux”[1] in the same terminal.

What’s happening here? The standard output is now redirected to “/dev/null”, effectively discarding it—since this device acts like a black hole for data. As a result, the terminal is no longer cluttered with output, allowing you to continue using it seamlessly.

Whenever you execute Bash scripts or commands with the “&” character, two key things occur. First, a child process is created by Bash to execute the specified code. Second, this child process is registered in the shell’s table of active jobs, enabling you to manage it as part of the current session.

Variable “$!”

When you send a job to execute in the background using the “&” character, Bash spawns a child process to handle the specified code. This child process is assigned its own unique Process ID (PID).

The special variable “$!” stores the PID of the most recently executed background job, making it easy to reference and manage.

To see this in action, let’s explore an example directly in the terminal.

$ while true; do echo Message; sleep 3; done >/dev/null &

When we press the Enter key, we will see something like the following.

$ while true; do echo Message; sleep 3; done >/dev/null &

[1] 569344

When you press Enter, the output displayed includes the job number (1 in this case) and the PID of the newly created process (569344). If you then print the value of the “$!” variable, you’ll notice that it matches the same PID.

$ echo $!

569344

When running the same code inside a Bash script, the PID won’t automatically appear on the screen as it does in the terminal. However, the “$!” variable will still hold the correct value—the PID of the last job sent to execute in the background.
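Inside a script, the usual pattern is to copy “$!” into a variable of your own right after starting the job. Here is a minimal sketch of that pattern; the “sleep 30” command is only a placeholder for real work, and the variable names are hypothetical.

```shell
#!/usr/bin/env bash
# Start a placeholder background command and save its PID immediately:
# "$!" is overwritten by the next command sent to the background.
sleep 30 &
worker_pid=$!
echo "Worker PID: ${worker_pid}"

# "kill -0" sends no signal; it only checks that the process exists.
if kill -0 "$worker_pid" 2>/dev/null; then
  worker_alive=yes
else
  worker_alive=no
fi

kill "$worker_pid"     # SIGTERM is the default signal

# Collect the exit status of the worker: 128 + 15 (SIGTERM) = 143.
worker_status=0
wait "$worker_pid" 2>/dev/null || worker_status=$?
echo "Worker alive before kill: ${worker_alive}, exit status: ${worker_status}"
```

Saving the PID early matters because every new background command replaces the value of “$!”.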

Job management commands

So far, we’ve learned how to send jobs to execute as background processes. However, once they’re running in the background, we haven’t explored how to interact with or manage them effectively. In the following subsections, we’ll delve into the various commands available to help you manage and control the jobs you’ve created.

The “jobs” command

The “jobs” command in Bash is a powerful tool used to monitor and manage background and suspended jobs in your shell session. It provides a list of currently active jobs along with their statuses, such as “Running” or “Stopped.” Each job is assigned a unique job ID, making it easy to reference and control them. Whether you’re juggling multiple tasks or simply curious about what’s running in the background, the “jobs” command is your go-to for keeping track of them.

Let’s say that we execute the following commands in our terminal.

$ while true; do echo Message; sleep 3; done >/dev/null &

[1] 716199

$ while true; do echo Message2; sleep 3; done >/dev/null &

[2] 716229

$ while true; do echo Message3; sleep 3; done >/dev/null &

[3] 716255

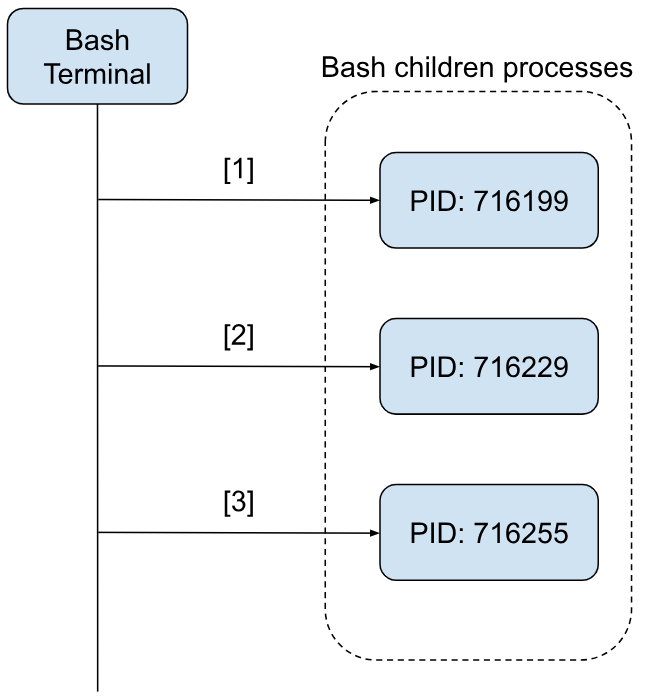

With the previous commands we were able to execute in the background 3 infinite loops that send messages to the device “/dev/null”. We could visualize what is happening in the following way.

To be able to inspect the shell’s table of active jobs we can use the “jobs” command like the following.

$ jobs

[1] Running while true; do echo Message; sleep 3; done > /dev/null &

[2]- Running while true; do echo Message2; sleep 3; done > /dev/null &

[3]+ Running while true; do echo Message3; sleep 3; done > /dev/null &

The “jobs” command comes equipped with the following options.

| Option | Description |
|---|---|
| “-l” | Lists PIDs in addition to the information displayed by default |
| “-n” | Lists only jobs whose status has changed since the last notification |
| “-p” | Lists only the PID of each job’s process group leader |
| “-r” | Lists only jobs that are running |
| “-s” | Lists only jobs that are stopped |
| “-x” | With this option the syntax is “jobs -x COMMAND [args]”. Every argument that looks like a “job specification” is replaced with the process group ID of the referred job, and then COMMAND is executed. |

You might be wondering what a “job specification” is. In Bash, a job specification (or “jobspec”) is the notation that job control commands such as “jobs”, “fg”, “bg”, and “kill” use to refer to a job. The syntax for job specifications includes several formats:

- “%n”: Refers to the job with the number “n”.

- “%str”: Refers to the job whose command starts with the string “str”. If multiple jobs start with the same string, it will fail due to ambiguity.

- “%?str”: Refers to the job with a command containing the string “str”. Like “%str”, it will fail if more than one job matches the criteria.

- “%%” or “%+”: Refers to the job most recently executed in the background or the job most recently suspended from the foreground.

- “%-”: Refers to the job that was the most recent before the current one (“%%”).

This system allows you to efficiently target and manage specific jobs using clear and concise references.
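To see a couple of these formats at work, here is a small sketch that uses job specifications with the “kill” command inside a script. The two “sleep” commands are placeholders picked so that their command lines differ, and the variable name is hypothetical.

```shell
#!/usr/bin/env bash
# Two placeholder jobs with distinguishable command lines.
sleep 30 &    # job 1
sleep 31 &    # job 2

kill %1        # "%n": the job with number 1
kill '%?31'    # "%?str": the job whose command contains "31"
# "kill %sleep" would fail here: both commands start with "sleep".

wait 2>/dev/null               # both jobs have been terminated
running_jobs=( $(jobs -pr) )   # PIDs of jobs that are still running
echo "Jobs still running: ${#running_jobs[@]}"
```

The “%?31” argument is quoted so that the shell does not try to expand the “?” as a file name pattern.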

Now, we are going to play with the previous options using the following script, which will be copied twice. We will end up with the following 3 scripts.

#!/usr/bin/env bash

# Script: 1st_job.sh

while true; do

  echo "Message $0"

  sleep 3

done >/dev/null

and

#!/usr/bin/env bash

# Script: 2nd_job.sh

while true; do

  echo "Message $0"

  sleep 3

done >/dev/null

and

#!/usr/bin/env bash

# Script: 3rd_job.sh

while true; do

  echo "Message $0"

  sleep 3

done >/dev/null

As you can see, all each script does is write “Message <name_of_script>” every three seconds; since the output is redirected to “/dev/null”, nothing appears on the screen.

Now we will execute the 3 scripts in the background.

$ ./1st_job.sh &

[1] 877267

$ ./2nd_job.sh &

[2] 877527

$ ./3rd_job.sh &

[3] 877589

Now we can inspect the table of active jobs with the command “jobs -l”.

$ jobs -l

[1] 877267 Running ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

Take a closer look at the output of the jobs command, and you’ll notice the following details:

- Job Number: Each job is preceded by a unique identifier in square brackets (e.g., “[1]”, “[2]”, “[3]”).

- Special Character: The position right after the job number is reserved for a special marker:

  - “+”: Indicates the current job, which by default is the most recent job sent to the background.

  - “-”: Represents the previous job, that is, the second most recent job sent to the background.

  - (blank): Assigned to all other jobs.

- Process ID (PID): Following the special marker is the PID of the process associated with the job.

- Job State: The current state of the job is displayed, such as Running, Stopped, or Terminated.

- Command: Finally, you see the command that is being executed in the background.

This structured format makes it easier to identify and manage jobs efficiently.

Now we are going to use the command “jobs -p” to show the PIDs of the 3 jobs.

$ jobs -p

877267

877527

877589
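Because “jobs -p” prints nothing but PIDs, its output is convenient to feed to other commands. As a rough sketch, a script could use it to stop every background job it started; the “sleep” commands are placeholders and the array name is hypothetical.

```shell
#!/usr/bin/env bash
# Three placeholder background jobs.
sleep 30 &
sleep 30 &
sleep 30 &

# "jobs -p" prints one PID per line, which makes it easy to act on
# every job at once.
pids=( $(jobs -p) )
echo "Stopping ${#pids[@]} jobs: ${pids[*]}"

kill "${pids[@]}"
wait 2>/dev/null   # reap the terminated jobs
```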

“fg” and “bg” commands

When a job is created in Bash, it can run in either the background or the foreground. Background jobs allow the shell to remain available for other tasks, enabling the user to work in parallel while the job executes. In contrast, foreground jobs occupy the shell, blocking it until the job completes.

Bash provides commands to control where a job is executed, giving users the flexibility to switch between the background and the foreground as needed.

One such command is “fg”. The “fg” command retrieves jobs that are currently running or stopped in the background and brings them to the foreground, allowing for direct interaction or observation. Let’s see an example.

$ jobs -l

[1] 877267 Running ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

$ fg %1

<terminal hanging>

At this point, the script is running in the foreground, preventing you from using the current shell. To move this job back to the background, you can use a simple method. Pressing Ctrl+Z sends the current job to the background in a stopped state, effectively pausing its execution until further action is taken.

$ jobs -l

[1] 877267 Running ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

$ fg %1

^Z

[1]+ Stopped ./1st_job.sh

$ jobs -l

[1] 877267 Stopped ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

As shown in the previous output, the first job has been moved to the background but is currently in a stopped state due to the use of Ctrl+Z. How can we resume its execution? This is where the “bg” command proves invaluable.

The “bg” command is designed to manage jobs in the background. If a job is already in the background but paused, using “bg” will resume its execution seamlessly. Let’s explore this in action by continuing with the previous example.

$ jobs -l

[1] 877267 Stopped ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

$ bg %1

[1]+ ./1st_job.sh &

$ jobs -l

[1] 877267 Running ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

Now you can see how the first job (“./1st_job.sh”) which was previously stopped is now running.
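The same stop and resume cycle can be reproduced in a script, with one caveat: job control is disabled in scripts by default, so this sketch assumes it is switched on with “set -m”. The “sleep 30” job, the scratch file, and the variable names are all hypothetical, and “kill -STOP” stands in for the Ctrl+Z key press.

```shell
#!/usr/bin/env bash
# Job control is off in scripts by default; "set -m" turns it on so that
# "bg" is available and stopped jobs are tracked.
set -m
state_file=$(mktemp)   # hypothetical scratch file for the "jobs" output

sleep 30 &             # placeholder job 1

kill -STOP %1          # pause the job (Ctrl+Z sends the related SIGTSTP)
sleep 0.5              # give the shell a moment to notice the change
jobs %1 > "$state_file"
state_after_stop=$(< "$state_file")

bg %1                  # resume the stopped job in the background
jobs %1 > "$state_file"
state_after_bg=$(< "$state_file")

echo "After STOP: ${state_after_stop}"
echo "After bg:   ${state_after_bg}"

kill %1                # clean up the placeholder job
wait 2>/dev/null
rm -f "$state_file"
```

The “fg” command is left out on purpose: it would block the script until the job finished, exactly as it blocks the terminal in the interactive examples.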

For the lazy people

The “fg” command allows us to move a background job to the foreground. If no specific job is indicated using a job specification (“job_spec”), the most recent job (in this case, job 3) is brought to the foreground by default.

However, there’s an even quicker method to achieve this: simply using the “%” symbol. For example.

$ jobs -l

[1] 877267 Running ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

$ %

./3rd_job.sh

<terminal hanging>

In the demonstration above, we use just “%” to effortlessly bring the most recent background job to the foreground, streamlining the process further.

The “disown” command

When a job is initiated in the background using the “&” character, the resulting process remains “attached” to the current shell terminal. This attachment is reflected in the shell’s table of active jobs, which can be inspected using the jobs command.

The downside of having jobs in this table is that if the interactive shell receives a “SIGHUP” (hang-up) signal, for example because its terminal was closed, it forwards the signal to all jobs listed in the table, potentially terminating them.

To avoid this, Bash provides the “disown” command, which removes jobs from the shell’s table of active jobs. The shell then no longer forwards “SIGHUP” to them.

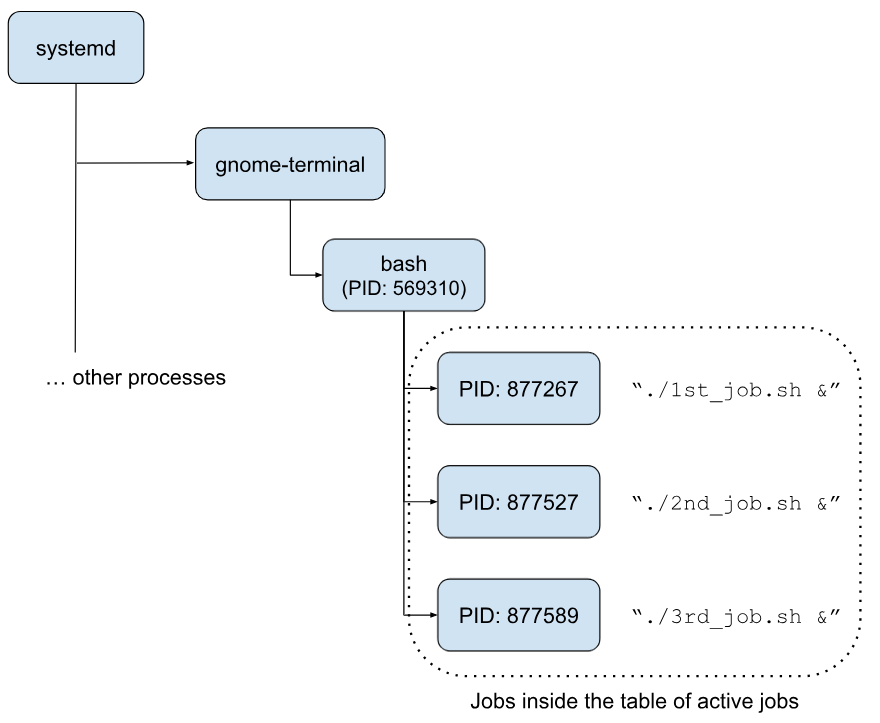

When you launch a Bash terminal, the process created for it is typically a child process of another, such as “init” or “systemd”[2] on Debian-based systems like Ubuntu. For example, in Ubuntu, opening a new terminal running Bash might produce a process hierarchy resembling the following:

systemd ── bash ── job_process

In this structure, “systemd” is the parent process responsible for starting the terminal, with “bash” as its child, and any background jobs initiated by bash become further child processes.

When we inspect the table of active jobs in our terminal, we get something like the following.

$ jobs -l

[1] 877267 Running ./1st_job.sh &

[2]- 877527 Running ./2nd_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

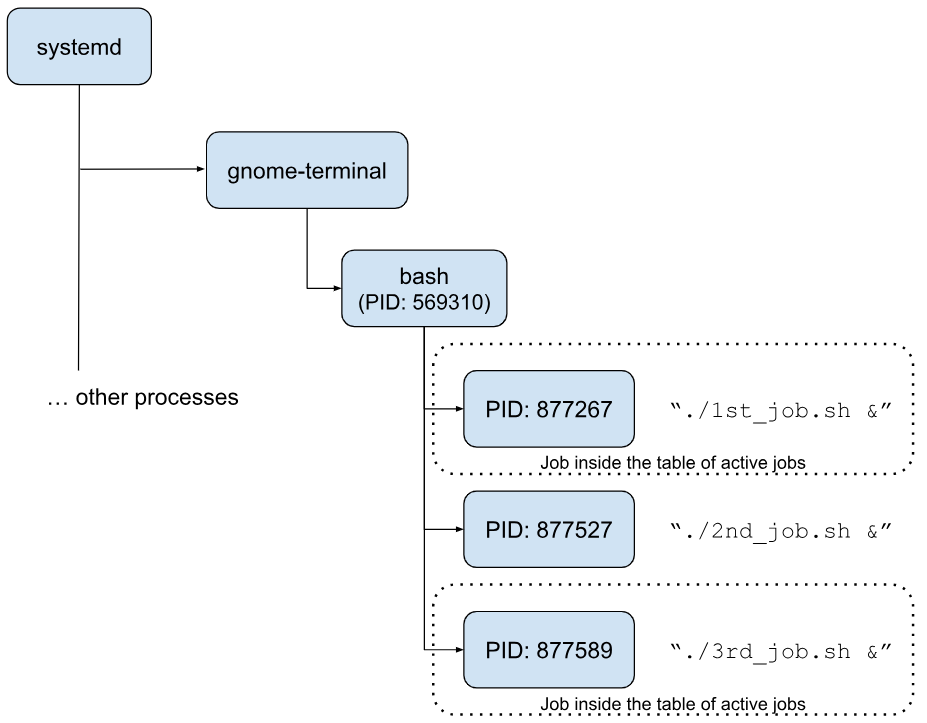

Now… What happens when we run the “disown” command for one of the current jobs? Let’s say we disown the job with ID 2 (which corresponds to the “2nd_job.sh” script).

$ disown %2

$ jobs -l

[1]- 877267 Running ./1st_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

So the job is gone from the table of active jobs for this terminal. But if we inspect the processes with the command “pstree -p” (option “-p” is to show the PIDs) we get something like the following.

This means that while the job is still linked to the original bash process, it no longer appears in the table of active jobs.

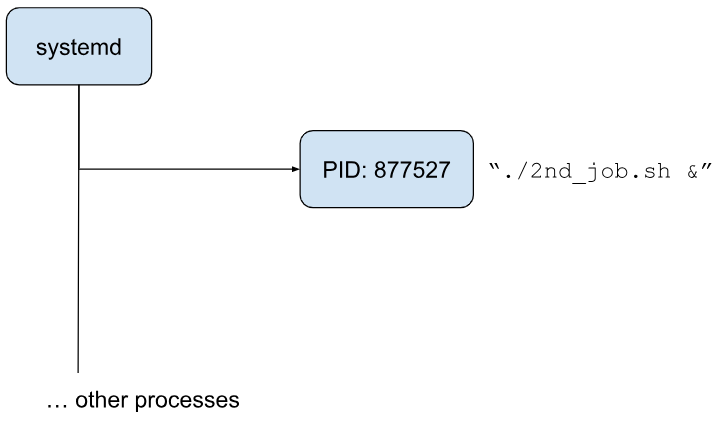
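The effect of “disown” can also be verified from a script. The sketch below assumes a placeholder “sleep 30” job and uses hypothetical variable names; it checks that the job table is empty after “disown” while the process itself is still alive.

```shell
#!/usr/bin/env bash
sleep 30 &             # placeholder background job
job_pid=$!

disown "$job_pid"      # remove it from the job table ("disown %1" also works)

remaining=( $(jobs -p) )   # the job table is now empty
if kill -0 "$job_pid" 2>/dev/null; then
  still_running=yes        # ...but the process itself keeps running
else
  still_running=no
fi
echo "Jobs in table: ${#remaining[@]}, process still running: ${still_running}"

kill "$job_pid"        # clean up the placeholder process
```

Bash also offers “disown -h”, which keeps the job in the table but marks it so that the shell does not forward “SIGHUP” to it.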

As mentioned earlier, when a “bash” process receives a “SIGHUP” signal, it forwards this signal to all jobs listed in its active jobs table, potentially causing them to terminate. But what about processes that have been removed from this table? Let’s explore this scenario with an example.[3]

$ echo $$

569310

$ jobs -l

[1]- 877267 Running ./1st_job.sh &

[3]+ 877589 Running ./3rd_job.sh &

$ kill -SIGHUP 569310

At this point, the terminal we were using will likely stop functioning or may even close entirely, requiring us to open a new one. In the new terminal, we can inspect the running processes and look for the PID assigned to “./2nd_job.sh &” (in this example, the PID is 877527, but it may differ in your case). You will find that this process is still running: because it had been removed from the table of active jobs, Bash did not forward the “SIGHUP” signal to it.

The “wait” command

The “wait” command is used to pause the execution of a script until a specified job (provided as an argument) changes its state or completes execution.

The command follows this syntax.

wait [-fn] [id ...]

If invoked without any arguments (a simple “wait”), it will pause until all background jobs have either finished executing or changed their state.
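In a script, where job control is normally off, a plain “wait” is most often used to block until every background job has terminated. A minimal sketch, assuming three placeholder workers that each append a line to a hypothetical scratch file:

```shell
#!/usr/bin/env bash
log_file=$(mktemp)   # hypothetical scratch file shared by the workers

# Launch three placeholder workers in the background.
for n in 1 2 3; do
  ( sleep "0.$n"; echo "worker $n finished" >> "$log_file" ) &
done

wait   # blocks until every background job has terminated

finished=$(( $(wc -l < "$log_file") ))
echo "Workers finished: ${finished}"
rm -f "$log_file"
```

By the time “wait” returns, all three workers have written their line, so the count is complete.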

Wait for all jobs to change status

Let’s explore how the “wait” command works with a practical example. To do this, we’ll use two Bash terminals. In the first terminal, we’ll utilize the previously created scripts (“1st_job.sh”, “2nd_job.sh”, and “3rd_job.sh”) and execute the following commands.

$ ./1st_job.sh &

[1] 1187549

$ ./2nd_job.sh &

[2] 1187578

$ ./3rd_job.sh &

[3] 1187600

$ wait

<terminal hangs>

In the second terminal, we will use the “kill” command to send signals to the previously running jobs. Here’s the sequence of actions.

First, type the following command in the second terminal.

$ kill -SIGSTOP 1187549

This sends the “SIGSTOP” signal to the process with PID 1187549, instructing it to pause execution[4]. If you check the first terminal, you’ll notice no visible change; the “wait” command remains active and appears to be idle, awaiting further events.

Next, execute the following command in the second terminal.

$ kill -SIGSTOP 1187578

This sends the “SIGSTOP” signal to the process with PID 1187578, corresponding to the “2nd_job.sh” script, also pausing its execution. Again, upon inspecting the first terminal, you’ll find that nothing has changed, and the “wait” command continues to wait.

Finally, send the same signal to the third job by running.

$ kill -SIGSTOP 1187600

Now, when you return to the first terminal, you’ll observe updates reflecting the changes. The output will resemble the following.

$ ./1st_job.sh &

[1] 1187549

$ ./2nd_job.sh &

[2] 1187578

$ ./3rd_job.sh &

[3] 1187600

$ wait

[1]+ Stopped ./1st_job.sh

bash: wait: warning: job 1[1187549] stopped

[2]+ Stopped ./2nd_job.sh

bash: wait: warning: job 1[1187549] stopped

bash: wait: warning: job 2[1187578] stopped

[3]+ Stopped ./3rd_job.sh

bash: wait: warning: job 1[1187549] stopped

bash: wait: warning: job 2[1187578] stopped

bash: wait: warning: job 3[1187600] stopped

Once all the jobs have been stopped, the “wait” command has nothing left to monitor, and control returns to the terminal. As long as at least one job is still running, however, “wait” keeps blocking the terminal, awaiting completion or further state changes.

Wait for all jobs to terminate

To ensure the “wait” command blocks the terminal until processes are fully terminated, you can use the “-f” option. Let’s explore this using two terminal windows. In the first terminal, you will resume the execution of the previously stopped processes by running the following command.

$ kill -CONT 1187549 1187578 1187600

$ jobs -l

[1] 1187549 Running ./1st_job.sh &

[2]- 1187578 Running ./2nd_job.sh &

[3]+ 1187600 Running ./3rd_job.sh &

As observed, the processes have resumed execution in the background. Next, run the command “wait -f”, and you will notice that the terminal is once again blocked, waiting for the processes to terminate.

$ jobs -l

[1] 1187549 Running ./1st_job.sh &

[2]- 1187578 Running ./2nd_job.sh &

[3]+ 1187600 Running ./3rd_job.sh &

$ wait -f

<terminal hangs>

Now in the second terminal we will kill the processes one by one and see what happens in the first terminal. We send the signal “SIGKILL” to the first process.

$ kill -KILL 1187549

If you check the first terminal you will see something like the following.

$ jobs -l

[1] 1187549 Running ./1st_job.sh &

[2]- 1187578 Running ./2nd_job.sh &

[3]+ 1187600 Running ./3rd_job.sh &

$ wait -f

[1] Killed ./1st_job.sh

<terminal hangs>

This shows that the “wait” command detected the termination of the first job. However, since other jobs are still running, the command continues to block the terminal. Next, we’ll terminate the second job from the second terminal using the following command.

$ kill -KILL 1187578

When you check the first terminal you will see something like the following.

$ jobs -l

[1] 1187549 Running ./1st_job.sh &

[2]- 1187578 Running ./2nd_job.sh &

[3]+ 1187600 Running ./3rd_job.sh &

$ wait -f

[1] Killed ./1st_job.sh

[2]- Killed ./2nd_job.sh

<terminal hangs>

Just like before, the “wait” command detected the termination of another job but continued to block the terminal as there were still active jobs remaining. Now, let’s terminate the third and final job from the second terminal to observe the outcome in the first terminal. Use the following command to terminate the third job.

$ kill -KILL 1187600

Now, if you take a look at the first terminal, you will see something like the following.

$ jobs -l

[1] 1187549 Running ./1st_job.sh &

[2]- 1187578 Running ./2nd_job.sh &

[3]+ 1187600 Running ./3rd_job.sh &

$ wait -f

[1] Killed ./1st_job.sh

[2]- Killed ./2nd_job.sh

[3]+ Killed ./3rd_job.sh

$

In this case, the “wait” command detected that the third and last job was killed (terminated) and, as there are no more jobs to wait for, it ends its execution.

Wait for any job

Now, imagine a scenario where multiple jobs are running in the background, but you want to wait specifically for the termination of just one of them—whichever completes first. To handle such cases, the “wait” command provides the “-n” option. For example, consider the following background jobs running.

$ jobs -l

[1] 59418 Running ./1st_job.sh &

[2]- 59447 Running ./2nd_job.sh &

[3]+ 59475 Running ./3rd_job.sh &

$

We will use the command “wait -n” to wait for any of them.

$ jobs -l

[1] 59418 Running ./1st_job.sh &

[2]- 59447 Running ./2nd_job.sh &

[3]+ 59475 Running ./3rd_job.sh &

$ wait -n

<terminal hangs>

Now, in a second terminal, we run the following command to terminate the execution of the first job.

$ kill -KILL 59418

$

If you now check the first terminal, you will see something like the following.

$ jobs -l

[1] 59418 Running ./1st_job.sh &

[2]- 59447 Running ./2nd_job.sh &

[3]+ 59475 Running ./3rd_job.sh &

$ wait -n

[1]+ Killed ./1st_job.sh

$

Here you can see that the “wait” command detects the termination of a job (in our case the first job) and returns control to the terminal.
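In a script, “wait -n” is handy when you want to react as soon as the first of several jobs finishes. The following sketch assumes Bash 4.3 or newer (where “-n” is available) and uses two placeholder jobs with hypothetical names: a slow one and a fast one that exits with status 7.

```shell
#!/usr/bin/env bash
# Requires Bash 4.3 or newer for "wait -n".
sleep 30 &                    # slow placeholder job
slow_pid=$!
( sleep 0.2; exit 7 ) &       # fast placeholder job with exit status 7

# "wait -n" returns as soon as any one job terminates, and its own
# exit status is the exit status of that job.
first_status=0
wait -n || first_status=$?
echo "First job to finish exited with status ${first_status}"

kill "$slow_pid"              # the slow job is still running; stop it
wait 2>/dev/null
```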

Wait for a specific job

Until now, we’ve used the “wait” command in a broad sense—either waiting for all background jobs to terminate or change state, or waiting for any one of them to finish. However, the “wait” command offers more granular control, allowing you to specify a particular job to monitor. This can be done using the job specification or the process ID (PID) of the job.

Let’s explore this with an example. Imagine the following jobs are currently running in the background.

$ jobs -l

[2]- 59447 Running ./2nd_job.sh &

[3]+ 59475 Running ./3rd_job.sh &

$

Now, let’s wait specifically for job number 2 to change its state. To do this, we execute the following command.

$ jobs -l

[2]- 59447 Running ./2nd_job.sh &

[3]+ 59475 Running ./3rd_job.sh &

$ wait %2

<terminal hangs>

In a second terminal, we’ll pause or stop the execution of job number 2 by running.

$ kill -STOP 59447

$

Returning to the first terminal, you’ll now see the following output.

$ jobs -l

[2]- 59447 Running ./2nd_job.sh &

[3]+ 59475 Running ./3rd_job.sh &

$ wait %2

[2]+ Stopped ./2nd_job.sh

$ jobs -l

[2]+ 59447 Stopped (signal) ./2nd_job.sh

[3]- 59475 Running ./3rd_job.sh &

$

Here, you can observe that the “wait” command detected the state change of job number 2 and unblocked the terminal as a result.

This behavior would remain identical if you had used the job’s PID instead of the job specification.
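Waiting for a specific PID is also the way to collect the exit status of each job individually. A minimal sketch with two placeholder jobs (the subshells and variable names are hypothetical):

```shell
#!/usr/bin/env bash
( sleep 0.2; exit 3 ) &   # placeholder job that fails with status 3
pid_a=$!
( sleep 0.1; exit 0 ) &   # placeholder job that succeeds
pid_b=$!

# "wait PID" returns the exit status of that particular job.
status_a=0
wait "$pid_a" || status_a=$?
status_b=0
wait "$pid_b" || status_b=$?   # already finished; Bash remembers its status
echo "Job A: ${status_a}, job B: ${status_b}"
```

Note that the second job has already finished by the time the script waits for it; Bash keeps its exit status so that “wait” can still report it.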

The “suspend” command

The “suspend” command functions similarly to pressing Ctrl+Z—it pauses or suspends the execution of the current shell, handing control back to its parent process (if one exists).

While it’s technically possible to use “suspend” within a Bash script, it’s generally not considered best practice. This is because, in a script, you have greater control over process management through other commands (such as those we’ve already covered), allowing you to handle processes more precisely. However, there’s nothing stopping you from using “suspend” in a script if you choose to do so.

A more appropriate use case for the suspend command is suspending an active shell session. For instance, imagine you’ve switched to a privileged user like “root” (commonly the administrator user of a machine).

$ echo $$

27444

$ sudo su

[sudo] password for username:

# echo $$

27757

# suspend

[1]+ Stopped sudo su

$ fg

# echo $$

27757

#

In this example, the “suspend” command pauses the “root” shell session and moves it to the background, allowing you to resume working in the unprivileged user shell (with PID 27444). The benefit here is that you can easily return to the “root” shell by running the “fg” command, which brings it back to the foreground.

The “kill” command

The “kill” command is used to send signals to processes or jobs. Its syntax can take the following forms.

kill [-s sigspec | -n signum | -sigspec] pid | jobspec ...

kill -l [sigspec]

As indicated, you can specify the signal to send using different formats: by its name (e.g., “SIGILL”) or its number (e.g., “4”). Both represent the same signal internally.
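The three ways of naming a signal in the syntax above are interchangeable. The sketch below sends “SIGTERM” to three placeholder “sleep” jobs, once with each form, and then uses “kill -l” to translate between the name and the number; the variable names are hypothetical.

```shell
#!/usr/bin/env bash
# Three placeholder jobs, each terminated with a different spelling of
# the same signal (SIGTERM, number 15).
sleep 30 & pid_a=$!
sleep 30 & pid_b=$!
sleep 30 & pid_c=$!

kill -s TERM "$pid_a"    # -s sigspec
kill -n 15   "$pid_b"    # -n signum
kill -TERM   "$pid_c"    # -sigspec
wait 2>/dev/null

# "kill -l" translates between signal names and numbers.
signal_name=$(kill -l 15)       # TERM
signal_number=$(kill -l TERM)   # 15
echo "15 -> ${signal_name}, TERM -> ${signal_number}"
```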

When a process receives a signal that it does not handle itself, it reacts with the default action of that signal, which is one of the following:

- Term: The default action is to terminate the process.

- Ign: The signal is ignored by the process.

- Core: The process is terminated, and a core dump file is generated.

- Stop: The process is paused or stopped.

- Cont: If the process is stopped, it resumes execution.

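The following table lists the signal numbers, their corresponding names, their default reactions, and brief descriptions to clarify their roles. The numbers shown are the ones used by Linux on x86 and ARM; they can differ on other architectures and operating systems.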

| signum | sigspec | Reaction | Description |
|---|---|---|---|
| 1 | SIGHUP | Term | Used to report that the user’s terminal is disconnected. By convention, it is also used to ask a process to restart or reload its configuration. |
| 2 | SIGINT | Term | Equivalent to “Ctrl+C”. The process is interrupted and then terminated. The process can, however, catch or ignore this signal. |
| 3 | SIGQUIT | Core | Similar to “SIGINT” but will also generate a core dump[5]. |
| 4 | SIGILL | Core | This signal is sent by the operating system to the process when the process tries to execute an illegal, malformed, or unknown instruction (“ILLegal instruction”). |
| 5 | SIGTRAP | Core | This signal is sent to a process when an exception (or trap) occurs. More on this in a later chapter. |
| 6 | SIGABRT | Core | This signal is typically sent to a process to tell it to abort, that is, to terminate abnormally. |
| 7 | SIGBUS | Core | This signal is sent to a process when the process generates a bus error[6]. |
| 8 | SIGFPE | Core | This signal is sent to a process on an erroneous arithmetic operation, such as an integer division by zero (“Floating-Point Exception”). |
| 9 | SIGKILL | Term | This signal forces the process to terminate immediately. This signal cannot be caught or ignored and the process does not get the chance to do clean-up. |
| 10 | SIGUSR1 | Term | This signal indicates a user-defined condition. |
| 11 | SIGSEGV | Core | This signal is sent to the process that causes a “segmentation violation”, which is when a process tries to access an invalid memory reference. |
| 12 | SIGUSR2 | Term | Similar to “SIGUSR1”. |
| 13 | SIGPIPE | Term | This signal is sent to a process that tries to write to a pipe that does not have any readers. |
| 14 | SIGALRM | Term | This signal is sent to a process when a timer expires. |
| 15 | SIGTERM | Term | The sender of the signal is requesting the process receiving this signal to terminate. The process is given the opportunity to shut down gracefully (save current status, release resources, etc.). This signal can be ignored. |
| 16 | SIGSTKFLT | Term | (unused) |
| 17 | SIGCHLD | Ign | When a child process[7] terminates its execution, the signal “SIGCHLD” is sent to the parent process. |
| 18 | SIGCONT | Cont | If the process receiving this signal is stopped, it will resume its execution. |
| 19 | SIGSTOP | Stop | The process receiving this signal will stop (pause) its execution. This signal cannot be caught or ignored. |
| 20 | SIGTSTP | Stop | This signal is the equivalent of pressing Ctrl+Z in a terminal window. The terminal asks the process running in the foreground to stop temporarily. The process can ignore the request. |
| 21 | SIGTTIN | Stop | This signal is received by a background process that tries to read from its terminal. |
| 22 | SIGTTOU | Stop | This signal is received by a background process that tries to write to its terminal. |
| 23 | SIGURG | Ign | An urgent condition has occurred on a socket. |
| 24 | SIGXCPU | Core | When a process uses more than its allotted CPU time, the operating system sends the process this signal. This signal acts like a warning; the process has time to save the progress (if possible) and close before the system kills the process with SIGKILL. |
| 25 | SIGXFSZ | Core | This signal is sent to a process that tries to grow a file beyond the allowed file size limit. |
| 26 | SIGVTALRM | Term | This signal is sent to the process when a virtual timer, which counts the CPU time used by the process, expires. |
| 27 | SIGPROF | Term | Sent when a profiling timer expires. |
| 28 | SIGWINCH | Ign | Window resize signal. |
| 29 | SIGIO | Term | This signal is sent to the process to announce that input or output is now possible. |
| 30 | SIGPWR | Term | When a power failure happens to a system, this signal will be sent to processes, if the system is still on. |
| 31 | SIGSYS | Core | When a process makes a bad system call, the process will receive this signal. |
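The difference between a signal that can be ignored (“SIGTERM”) and one that cannot (“SIGKILL”) is easy to observe. The sketch below is only an illustration under stated assumptions: the child is a placeholder Bash process that ignores “SIGTERM” by means of “trap” with an empty action, and the short “sleep” pauses are there just to give the signals time to arrive.

```shell
#!/usr/bin/env bash
# A placeholder child that ignores SIGTERM (trap with an empty action).
bash -c 'trap "" TERM; sleep 3; exit 0' &
child_pid=$!
sleep 0.5                      # let the child install its trap

kill -TERM "$child_pid"        # ignored by the child
sleep 0.5
if kill -0 "$child_pid" 2>/dev/null; then
  survived_term=yes
else
  survived_term=no
fi

kill -KILL "$child_pid"        # cannot be caught or ignored
child_status=0
wait "$child_pid" 2>/dev/null || child_status=$?   # 128 + 9 = 137
echo "Survived SIGTERM: ${survived_term}, status after SIGKILL: ${child_status}"
```

The exit status reported by “wait” for a process killed by a signal is 128 plus the signal number, which is why “SIGKILL” shows up as 137.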

In addition to the standard signals, there are also real-time signals. Depending on the operating system, these signals typically begin at number 34 or 35, known as “SIGRTMIN,” and extend up to signal 64, referred to as “SIGRTMAX.” The signals in this range are sequentially numbered and represented with suffixes, as shown below:

- “SIGRTMIN”

- “SIGRTMIN+1”

- “SIGRTMIN+2”

- …

- “SIGRTMIN+15”

- “SIGRTMAX-14”

- “SIGRTMAX-13”

- …

- “SIGRTMAX-1”

- “SIGRTMAX”

We won’t delve further into these signals here, but it’s important to know they exist.

Core dump

As previously mentioned, a core dump is a file that captures the state of a process’s memory at the moment it receives a specific signal. This file is invaluable for diagnosing and investigating the cause of a program’s failure.

In Debian-based systems, such as Ubuntu and Mint, you need to install the “systemd-coredump”[8] package to work with core dumps. Once this package is installed, any generated core dumps will be stored in the “/var/lib/systemd/coredump/” directory.

Now, let’s create a core dump. Start by running any program, such as a text editor or a Bash script, and then send it a signal that triggers a core dump—for instance, the “SIGABRT” signal.

For example, we will start the script “1st_job.sh”, which we used earlier, in the background.

$ ./1st_job.sh &

[1] 192308

$ jobs -l

[1]+ 192308 Running ./1st_job.sh &

$

Now, we send the signal “SIGABRT” to the job by using either the PID or the job specification.

$ ./1st_job.sh &

[1] 192308

$ jobs -l

[1]+ 192308 Running ./1st_job.sh &

$ kill -SIGABRT 192308

$ jobs -l

[1]+ 192308 Aborted (core dumped) ./1st_job.sh

$
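A script can detect the same outcome without reading the output of “jobs”. When a process is killed by a signal, the shell reports an exit status of 128 plus the signal number, so a process ended by “SIGABRT” (signal 6 on Linux) yields 134. The sketch below uses a “sleep” process as a stand-in for “1st_job.sh”; whether a core file is actually written still depends on the system configuration.

```shell
# Start a stand-in for a long-running job in the background.
sleep 30 &
job_pid=$!

# Abort it, as "kill -SIGABRT <PID>" does in the example above.
kill -ABRT "$job_pid"

# "wait" returns the exit status of the job: 128 + signal number.
job_status=0
wait "$job_pid" || job_status=$?

echo "Exit status: $job_status"
# "kill -l" also translates such an exit status back into a signal name.
echo "Signal name: $(kill -l "$job_status")"
```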

Once the job has been aborted, you will find the core dump file in the directory “/var/lib/systemd/coredump/”[9].

In this example, the file was created with the following name: “core.bash.1000.07773a8f89ce4667a3c8af364e9b59d7.192308.1733724572000000.zst”[10].

The core dump file name follows a structured pattern that encodes information about the process and the environment in which the dump occurred. Here’s a breakdown of the components of the example file name:

- “core”: Indicates that the file is a core dump.

- “bash”: The name of the executable that generated the core dump (in this case, the Bash shell that was running the script).

- “1000”: The user ID (UID) of the process owner. In this case, it’s 1000, which is typically the UID of the first non-root user on many Linux systems.

- “07773a8f89ce4667a3c8af364e9b59d7”: A 128-bit identifier. In files written by “systemd-coredump”, this is the ID of the boot during which the dump was taken, which keeps dumps from different boots distinct even when a PID repeats.

- “192308”: The process ID (PID) of the dumped process.

- “1733724572000000”: A timestamp representing when the core dump was generated, expressed in microseconds since the Unix epoch.

- “zst”: The file extension, indicating that the core dump has been compressed using the Zstandard (zstd) compression algorithm.

This structured format allows for easy identification and organization of core dumps, especially when dealing with multiple processes or users.
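As an illustration, the fields can be pulled apart in the shell by splitting the name on dots. This is a minimal sketch that assumes the executable name itself contains no dot and that the timestamp counts microseconds since the Unix epoch (which places this example in December 2024). The variable names are chosen for this example only.

```shell
# Example file name from the text above.
dump_name='core.bash.1000.07773a8f89ce4667a3c8af364e9b59d7.192308.1733724572000000.zst'

# Split the name on "." into one variable per field.
IFS=. read -r prefix executable uid identifier pid timestamp extension <<EOF
$dump_name
EOF

echo "Executable:  $executable"
echo "User ID:     $uid"
echo "Process ID:  $pid"
# Assumption: the timestamp is in microseconds, so integer division
# by one million yields whole seconds since the epoch.
echo "Epoch (s):   $((timestamp / 1000000))"
echo "Compression: $extension"
```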

The “killall” command

The “killall” command functions similarly to the “kill” command, as both send signals to processes. However, while “kill” targets processes by their PIDs or job specifications, “killall” selects processes by their program names.

Let’s illustrate this with an example. Imagine you open the text editor “gedit”. To terminate it using “killall”, you would run the following command in the terminal:

$ killall gedit

This will immediately close the “gedit” window.

By default, “killall” sends the “SIGTERM” signal to terminate processes gracefully. If you wish to send a different signal, you can specify it using the “-s” option followed by the signal’s name. For example, to send the “SIGALRM” signal to “gedit”, you would use:

$ killall -s SIGALRM gedit

Here are some additional options available with the “killall” command:

| Option | Description |
|---|---|
| -e / --exact | Require an exact match for very long names |
| -I / --ignore-case | Match process names case-insensitively |
| -i / --interactive | Ask for confirmation before killing a process |
| -w / --wait | Wait for all the processes being killed to die. The command checks once per second whether any of them is still alive and returns only when all of them are dead. |

This is not an exhaustive list of options. The “killall” command offers more functionality, and I encourage you to explore its full capabilities using the “man” command[11], a valuable resource for diving deeper into any Linux command.

The “nohup” command

One important signal to understand is “SIGHUP”. As we learned earlier, this signal not only notifies processes of a terminal disconnect but can also be used to restart a process.

Simulating “SIGHUP” Without the “kill” Command

You can trigger a “SIGHUP” signal without using the “kill” command by following these steps:

- Open a terminal and check the status of the “huponexit” shell option. If it’s not enabled, turn it on with:

$ shopt -s huponexit

- Start a background job and then exit the terminal. When you close the terminal, a “SIGHUP” signal will be sent to all running jobs, causing them to terminate by default.

This behavior means that if “huponexit” is enabled, you need to keep your terminal open to ensure your scripts or jobs continue running. However, there are scenarios where keeping the terminal open is impractical, and you may not have permission to modify shell options.
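A script or session can check the option before relying on it. The sketch below uses “shopt -q”, which prints nothing and reports the state of the option through its exit status. The variable name is arbitrary.

```shell
# "shopt -q" is silent: it succeeds if the option is on and fails if it is off.
if shopt -q huponexit; then
    huponexit_state=on
else
    huponexit_state=off
fi

echo "huponexit is $huponexit_state"
```

If you turned the option on only to experiment, “shopt -u huponexit” turns it off again.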

Addressing the “SIGHUP” Signal for Long-Running Processes

Let’s consider a common scenario: you connect to a remote server to execute a long-running process. Here are two approaches to handle this:

- Default Approach: Connect to the server, start your script, and hope the connection remains stable. If the server disconnects due to inactivity or other issues, a “SIGHUP” signal will terminate your jobs.

- Using “nohup”: A better solution is to use the “nohup” command. This shields your process from “SIGHUP”, allowing it to continue running even after you disconnect or close the terminal.

Example: Using “nohup” for Long-Running Processes

Let’s walk through an example where we connect to a remote machine and use “nohup” to ensure a script keeps running:

- Connect to the Remote Machine: Use the following command to log in to the remote server:[12]

$ ssh teacher@192.168.178.73

teacher@192.168.178.73's password:

...

teacher@course:~$

- Check the Shell Options: Inspect the current shell options using “shopt”:

$ shopt

autocd off

assoc_expand_once off

cdable_vars off

...

huponexit off <<<<<<<<<<<<

...

$

- Enable “huponexit” (Optional): Turn on the “huponexit” option to simulate terminal behavior:

$ shopt -s huponexit

$ shopt

huponexit on <<<<<<<<<<<<

- Run a Job with “nohup”: Start a long-running script using “nohup”:

$ nohup ./1st_job.sh &

[1] 2750

$

- Log Out of the Remote Session: When you log out, the job receives the “SIGHUP” signal. However, because it was started with “nohup”, it is protected and continues running.

- Verify the Process: Reconnect to the remote machine and check the running processes using “ps aux”. You will find that the job with PID 2750 is still active, confirming that it was unaffected by the terminal disconnect.

Using “nohup” provides peace of mind, ensuring your critical tasks run uninterrupted even in challenging environments.
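The protection can also be checked on a local machine, without a remote session. In the sketch below, “sleep 30” is a stand-in for a long-running script, and the “SIGHUP” signal is sent by hand with “kill” instead of by closing a terminal. The one-second pauses are an arbitrary allowance for the process to start and for the signal to be delivered.

```shell
# Start a stand-in for a long-running script under nohup, discarding output.
nohup sleep 30 >/dev/null 2>&1 &
job_pid=$!

# Give nohup a moment to ignore SIGHUP and start the command.
sleep 1

# Send SIGHUP by hand, as a closing terminal would.
kill -HUP "$job_pid"
sleep 1

# "kill -0" sends no signal; it only reports whether the process exists.
if kill -0 "$job_pid" 2>/dev/null; then
    survived=yes
else
    survived=no
fi

# Clean up: nohup does not protect against SIGTERM.
kill "$job_pid" 2>/dev/null
wait "$job_pid" 2>/dev/null || true

echo "Process $job_pid survived SIGHUP: $survived"
```

Without “nohup” in the first command, the same “SIGHUP” would terminate the “sleep” process, since termination is the default action for that signal.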

Summary

The concept of jobs in Unix-like systems, particularly when working with Bash, is central to managing processes within a shell session. A job is a unit of work started by the shell, made up of one or more processes, which can run in either the foreground or the background. Foreground jobs occupy the terminal until they finish, while background jobs, started with the “&” character, let you keep using the terminal while they execute in parallel. The “$!” variable holds the PID of the most recently started background job, making it easier to track and manage. The “jobs” command lists active jobs, “fg” brings a job to the foreground, and “bg” resumes a stopped job in the background. These utilities simplify multitasking, enabling efficient management of complex workflows.

Signals are a fundamental mechanism for interacting with jobs and processes. Signals such as “SIGTERM”, “SIGSTOP”, and “SIGHUP” allow you to terminate, pause, or notify processes of terminal disconnections. The “kill” command sends signals to specific processes using their PIDs or job specifications, while “killall” sends signals to all processes with a matching program name. This flexibility is enhanced by the ability to specify signals by name (e.g., “SIGKILL”) or by their numeric equivalent, with the outcome determined by either the process’s default behavior or custom signal handling.

Several advanced commands further refine job management. The “wait” command suspends the shell until specified jobs complete or change state, with options to wait for all jobs, any job, or specific PIDs. The “nohup” command protects processes from the “SIGHUP” signal, ensuring they persist even if the terminal session ends—particularly useful for long-running tasks on remote servers prone to disconnections. Similarly, the “disown” command removes a job from the shell’s job table, effectively detaching it so it won’t receive signals like SIGHUP when the shell exits.

The shell option “huponexit” determines whether jobs receive a “SIGHUP” signal when an interactive login shell exits. When enabled, this option sends “SIGHUP” to all active jobs, terminating them unless they are protected by “nohup” or “disown”. This behavior is useful for cleaning up resources but can be undesirable for processes that need to continue running after the shell exits. The “suspend” command adds another layer of control by pausing the current shell until it receives a “SIGCONT” signal, which can be handy in scenarios requiring temporary suspensions.

Debugging processes often involves creating core dumps, which capture the state of a process when it receives certain signals, such as “SIGABRT”. These dumps are invaluable for diagnosing crashes and investigating failures. By combining tools like “kill”, “wait”, “nohup”, “disown”, and the use of background jobs with “&”, Unix-like environments offer a robust and versatile framework for managing processes. This makes them ideal for multitasking, remote work, and developing reliable applications.

“Knowledge is power.” – Francis Bacon

The ability to control and manipulate jobs equips you with the power to harness your system’s full potential.

References

- http://mywiki.wooledge.org/BashGuide/JobControl#jobspec

- http://web.mit.edu/gnu/doc/html/features_5.html

- https://bytexd.com/bash-wait-command/

- https://copyconstruct.medium.com/bash-job-control-4a36da3e4aa7

- https://devhints.io/bash

- https://javarevisited.blogspot.com/2011/06/special-bash-parameters-in-script-linux.html#axzz7guKvWQtW

- https://linuxize.com/post/bash-wait/

- https://ostechnix.com/suspend-process-resume-later-linux/

- https://serverfault.com/questions/205432/how-do-i-reclaim-a-disownd-process

- https://tldp.org/LDP/abs/html/x9644.html

- https://unix.stackexchange.com/questions/3886/difference-between-nohup-disown-and

- https://unix.stackexchange.com/questions/4034/how-can-i-disown-a-running-process-and-associate-it-to-a-new-screen-shell

- https://unix.stackexchange.com/questions/85021/in-bash-scripting-whats-the-meaning-of

- https://www.baeldung.com/linux/jobs-job-control-bash

- https://www.cyberciti.biz/faq/unix-linux-jobs-command-examples-usage-syntax/

- https://www.gnu.org/software/bash/manual/html_node/Job-Control-Basics.html

- https://www.gnu.org/software/bash/manual/html_node/Signals.html

- https://www.linuxjournal.com/content/job-control-bash-feature-you-only-think-you-dont-need

- https://www.reddit.com/r/bash/comments/rr4apy/jobs_x_command/

1. Check https://man7.org/linux/man-pages/man1/ps.1.html for more information.↩

2. “systemd” is the parent process of most of the processes in the operating system. You can inspect the hierarchy of processes in the operating system with the command “pstree”.↩

3. We will talk about the “kill” command later in the chapter.↩

4. Stopping the execution of a process is different from terminating the execution. When a process is “stopped” the process is still in memory but just paused. When a process is “terminated” the program itself is deallocated from the memory.↩

5. A “core dump” is a file that contains the state of the memory of the process at the time of receiving the signal. This tends to be very useful to do investigations on why a program failed.↩

6. A “bus error” is a signal raised by hardware, notifying the operating system that a process is trying to access memory that the CPU cannot physically address.↩

7. A “child process” is a process that has been started by another process. This second process is known as the “parent process”.↩

8. In Ubuntu you can install the package using the command “aptitude install systemd-coredump”.↩

9. If you have installed the package “systemd-coredump” in your system.↩

10. The name of the core dump file will most likely be different in your system.↩

11. Executing the command “man killall” will give you access to all the options. Just play with different options and experiment.↩

12. You can connect to a remote server of your own. The server I am using in this example is a virtual machine in my local environment with a Debian distribution installed.↩